AI: Deepest Lessons from DeepSeek. RTZ #615

...core takeaways that will accelerate AI companies big and small, if applied

The DeepSeek impact crater widens, as it were, intellectually speaking. Amidst all the debates over US/China geopolitics, Big AI Capex implications, and the impact on the business models of big tech companies, there are four deep lessons I take away so far from DeepSeek’s path to these groundbreaking innovations.

Mind you, for now, they’re available for the world to ‘copy and/or emulate’ as it sees fit, both in terms of software and hardware. Companies the world over will miss key opportunities in this AI Tech Wave going forward IF THEY DON’T.

Here are the FOUR deepest lessons as I see them right now:

1. Transparency matters:

Everyone knows, and generally lauds, the fact that DeepSeek has pursued an Open Source path with its LLM AI V3, its Reasoning AI product R1, and now its Multimodal AI Janus-Pro releases, along with the key under-the-hood technical breakthroughs behind them.

And founder Liang Wenfeng has had a deep commitment to that approach for years now, so presumably it will continue. But that open source approach was ONLY the beginning. The overarching principle was Transparency built into the product at its core. It went on to ‘delight’ its users, especially those of R1, its AI Reasoning product.

As Ben Thompson of Stratechery notes in his must-read follow-up analysis of DeepSeek, this approach worked better than OpenAI’s decision NOT to show how its o1 and now o3 models do their ‘AI Reasoning’ via their ‘chain of thought’ calculations (Bolding mine):

“DeepSeek R1, which does show its chain of thought, has shown that this was a mistake.”

“Exposed chain-of-thought teaches users how to do better prompting, because they can see exactly where the AI gets confused. It’s a superior product experience for this reason alone.”

“Exposed chain-of-thought increases trust in the model, because you gain an understanding of how it is arriving at the answer.”

“Exposed chain-of-thought is just cool and endearing. One thing that was interesting with the normie discovery of R1 is how delightful they found the experience, and keep in mind, most of these folks are probably on the free tier of ChatGPT, which means they didn’t even have access to o1 at all (but they will now).”

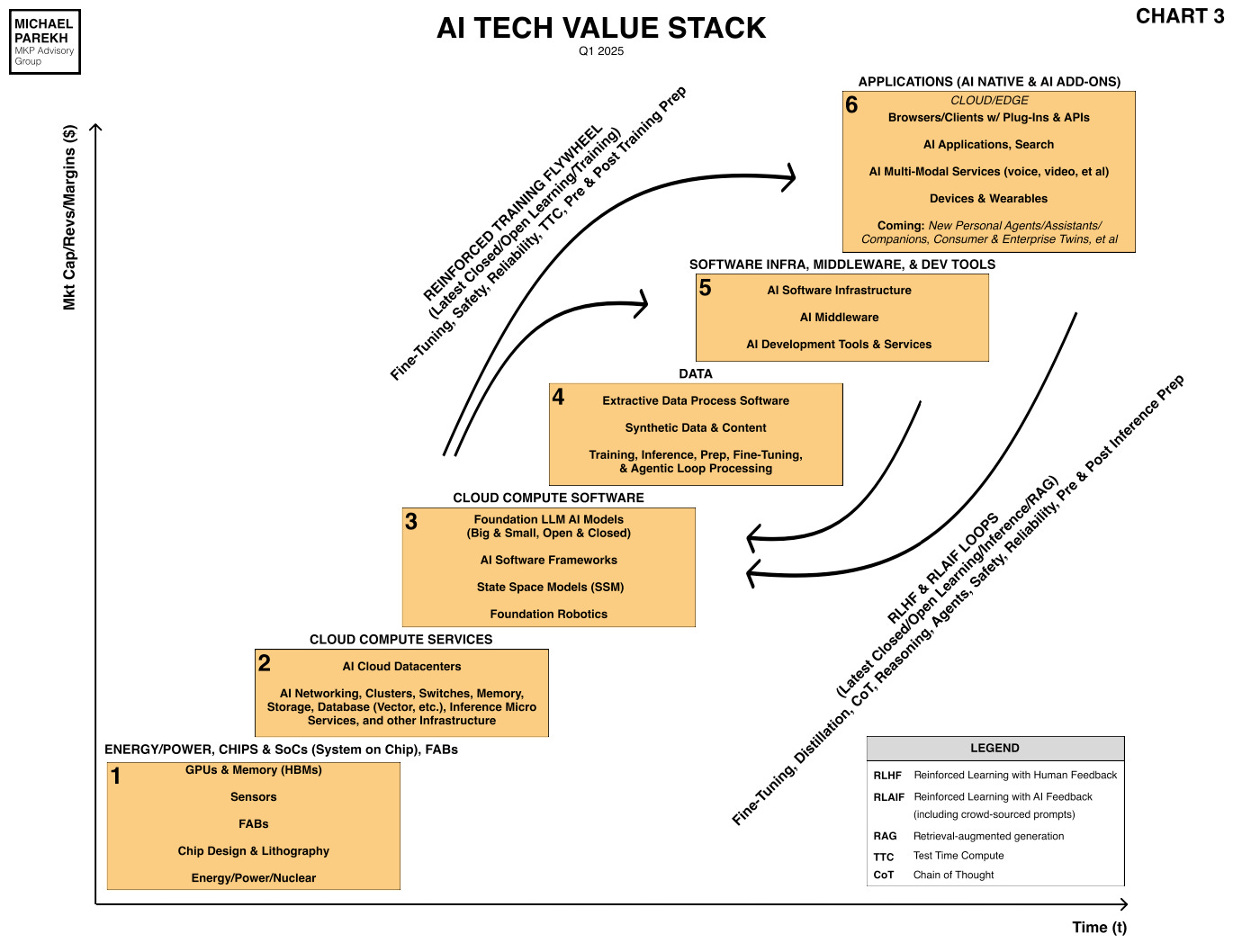

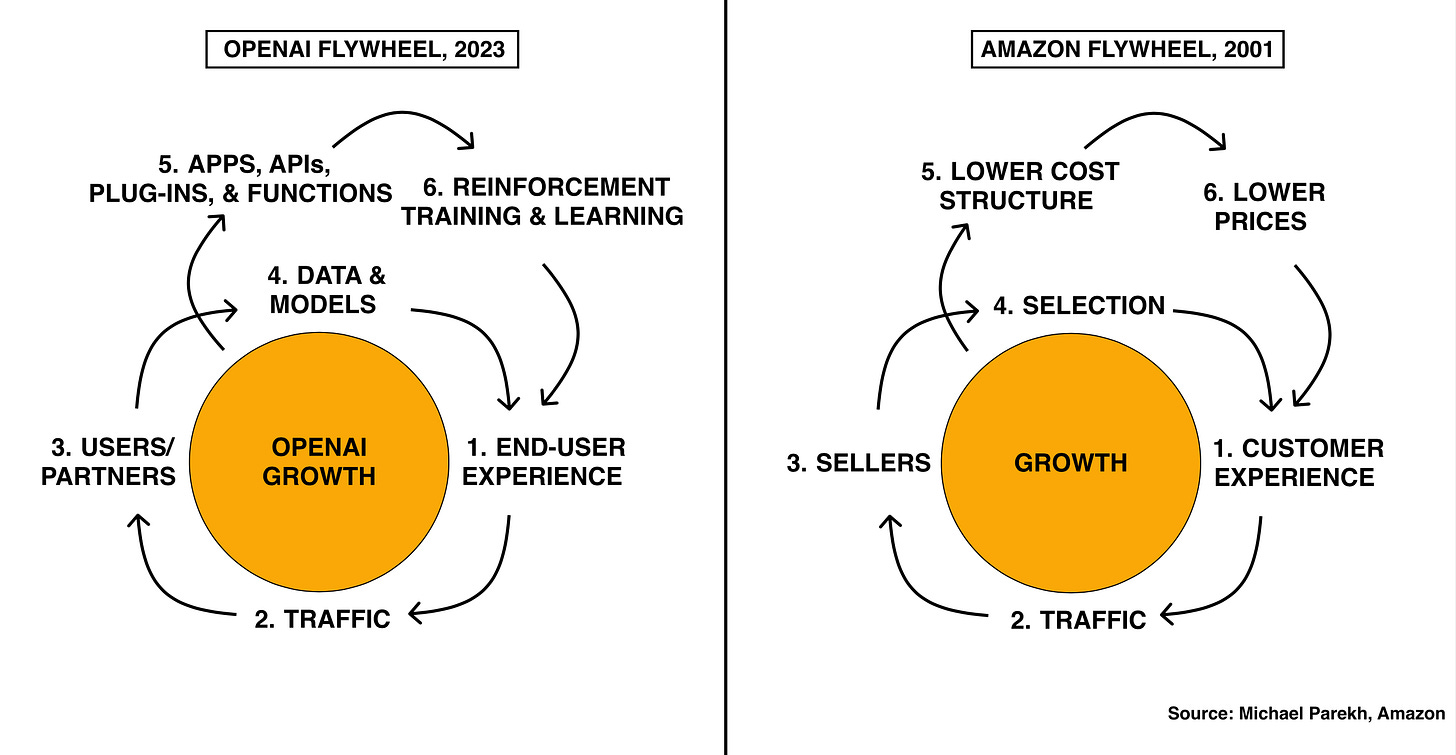

I’ve discussed ‘Chain of Thought’ innovations as part of the ‘Reinforcement Learning’ technologies that are key in the AI Tech Stack this time around (see Legend below):

That transparency into how the model does its ‘reasoning’ is particularly useful for end users, and it should be exposed for that reason alone. Not held back for competitive reasons relative to peers and customers building on the core models.

A key deep lesson from DeepSeek.
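To make the ‘exposed chain of thought’ idea concrete, here is a minimal sketch of how an app might surface a reasoning model’s thinking instead of hiding it. It assumes the raw completion wraps the reasoning in `<think>...</think>` tags, as the open-weights DeepSeek R1 models do; hosted APIs may return reasoning in a separate field instead. The helper names `split_reasoning` and `render` are hypothetical, not part of any DeepSeek or OpenAI library.

```python
import re

# Assumption: reasoning is wrapped in <think>...</think> tags in the raw completion.
THINK_RE = re.compile(r"<think>(.*?)</think>", re.DOTALL)


def split_reasoning(raw: str) -> tuple[str, str]:
    """Return (reasoning, answer) so a UI can show both, not just the answer."""
    match = THINK_RE.search(raw)
    if match is None:
        # No reasoning block found: treat the whole completion as the answer.
        return "", raw.strip()
    reasoning = match.group(1).strip()
    # Everything outside the think block is the user-facing answer.
    answer = (raw[: match.start()] + raw[match.end():]).strip()
    return reasoning, answer


def render(raw: str) -> str:
    """Format a completion with the reasoning shown above the final answer."""
    reasoning, answer = split_reasoning(raw)
    if not reasoning:
        return answer
    return f"[Reasoning]\n{reasoning}\n\n[Answer]\n{answer}"


if __name__ == "__main__":
    sample = "<think>The user asks 2 + 2. Adding gives 4.</think>\n\nThe answer is 4."
    print(render(sample))
```

The design choice mirrors the lesson above: the reasoning is kept and displayed rather than stripped, so users can see where the model went right or got confused.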

2. Constraints matter:

Tech History through various waves has always shown us that founders, engineers and entrepreneurs do amazing things under constraints. Whether the constraints are of capital or technology, entrepreneurs come up with amazing solutions, iterating their way to improvements and innovations.

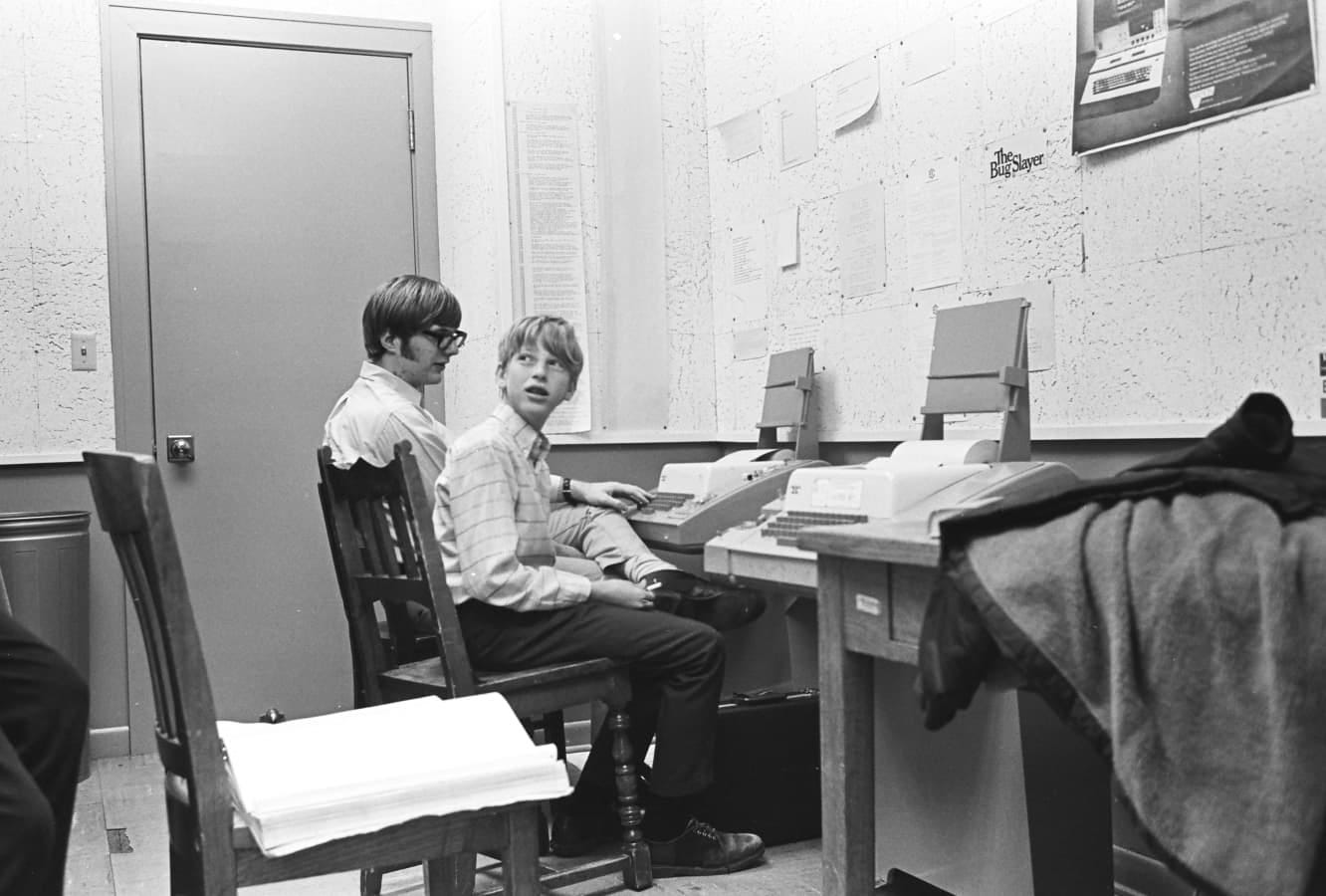

We saw it in the PC wave of the late 1970s and early 1980s. Bill Gates, who has a new book out about his early days called Source Code, tells this on-point story from his formative years:

“Around that time, someone had lent Lakeside a computer called a PDP-8, made by Digital Equipment Corp. This was 1971, and while I was deep into the nascent world of computers, I had never seen anything like it. Up until then, my friends and I had used only huge mainframe computers that were simultaneously shared with other people. We usually connected to them over a phone line or else they were locked in a separate room.”

“But the PDP-8 was designed to be used directly by one person and was small enough to sit on the desk next to you. It was probably the closest thing in its day to the personal computers that would be common a decade or so later—though one that weighed 80 pounds and cost $8,500. For a challenge, I decided I would try to write a version of the Basic programming language for the new computer.”

“Before the hike I was working on the part of the program that would tell the computer the order in which it should perform operations when someone inputs an equation, such as 3 (2 + 5) x 8 − 3, or wants to create a game that requires complex math. In programming that feature is called a formula evaluator.”

“Trudging along with my eyes on the ground in front of me, I worked on my evaluator, puzzling through the steps needed to perform the operations. Small was key. Computers back then had very little memory, which meant programs had to be lean, written using as little code as possible so as not to hog memory. The PDP-8 had just six kilobytes of the memory a computer uses to store data that it’s working on.”

“I’d picture the code and then try to trace how the computer would follow my commands. The rhythm of walking helped me think, much like a habit I had of rocking in place.”

“Like the famous line “I would have written a shorter letter, but I did not have the time,” it’s easier to write a program in sloppy code that goes on for pages than to write the same program on a single page. The sloppy version will also run more slowly and use more memory. Over the course of that hike, I had the time to write short.”

“By the time school started again in the fall, whoever had lent us the PDP-8 had reclaimed it. I never finished my Basic project. But the code I wrote on that hike, my formula evaluator—and its beauty—stayed with me.”

“Three and a half years later, I was a sophomore in college not sure of my path in life when Paul Allen, one of my Lakeside friends, burst into my dorm room with news of a groundbreaking computer. I knew we could write a Basic language for it; we had a head start.”

“The first thing I did was to think back to that miserable day on the Low Divide and retrieve from my memory the evaluator code I had written. I typed it into a computer, and with that planted the seed of what would become one of the world’s largest companies and the beginning of a new industry.”
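For readers curious what a ‘formula evaluator’ actually does, here is a minimal sketch in modern Python. It is not Gates’s code (his was written for the PDP-8 and later for the Altair, not in Python); it is a standard recursive-descent evaluator that gives `*` and `/` higher precedence than `+` and `-` and honors parentheses. The example expression in the quote appears to have lost an operator, so the usage below assumes it reads as 3 × (2 + 5) × 8 − 3. The function names are hypothetical, and `/` is treated as integer floor division to keep the sketch small.

```python
import re

# Tokens are integers, the four operators, and parentheses; whitespace is skipped.
TOKEN_RE = re.compile(r"\s*(\d+|[-+*/()])")


def tokenize(text: str) -> list[str]:
    tokens, pos, text = [], 0, text.rstrip()
    while pos < len(text):
        m = TOKEN_RE.match(text, pos)
        if m is None:
            raise ValueError(f"unexpected character at position {pos}")
        tokens.append(m.group(1))
        pos = m.end()
    return tokens


def evaluate(text: str) -> int:
    tokens = tokenize(text)
    pos = 0

    def peek():
        return tokens[pos] if pos < len(tokens) else None

    def take():
        nonlocal pos
        if pos >= len(tokens):
            raise ValueError("unexpected end of expression")
        tok = tokens[pos]
        pos += 1
        return tok

    def expr():  # lowest precedence: expr := term (('+' | '-') term)*
        value = term()
        while peek() in ("+", "-"):
            op, rhs = take(), term()
            value = value + rhs if op == "+" else value - rhs
        return value

    def term():  # higher precedence: term := factor (('*' | '/') factor)*
        value = factor()
        while peek() in ("*", "/"):
            op, rhs = take(), factor()
            value = value * rhs if op == "*" else value // rhs  # floor division
        return value

    def factor():  # highest precedence: number, parenthesized expr, or unary minus
        tok = take()
        if tok == "(":
            value = expr()
            if take() != ")":
                raise ValueError("expected ')'")
            return value
        if tok == "-":
            return -factor()
        return int(tok)

    result = expr()
    if pos != len(tokens):
        raise ValueError("unexpected trailing tokens")
    return result


if __name__ == "__main__":
    print(evaluate("3 * (2 + 5) * 8 - 3"))
```

Each precedence level is one small function, which is one way to keep an evaluator short, in the spirit of the ‘write short’ discipline Gates describes.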

I cite this story to draw a line from Bill’s experience to that of DeepSeek founder Liang Wenfeng and his 200 employees. They similarly faced constraints: they could not get Nvidia (US) AI GPU chips in the quantities they would have had if they were anything but a tech startup in China, given all the ‘threading the needle’ efforts in the US/China tech trade relationship.

So they did what engineers do. Figured out how to do Far More with Far Less. Made necessity the mother of invention, etc. The many technical innovations in their approach have taken the breath away from leading AI software engineers worldwide. But those innovations came about as a result of constraints.

Geopolitical ones, in this case. An AI ‘Sputnik’ moment, as Marc Andreessen pithily put it, in the ‘AI Space Race’ with China that has been underway for years now. That race led to the misguided AI Diffusion Rules of the last (Biden) administration.

The unintended consequence of all these tech chip curbs on China is DeepSeek. And many others like it to come.

Innovation thrives under Constraints.

From budding founders to entire countries, to life itself, nature always ‘Finds a Way’. Jurassic Park style.

This is a key Deeper takeaway from the DeepSeek moment.

3. Pricing Matters:

In this translated interview with DeepSeek founder Liang Wenfeng, who has run a quant hedge fund with over $8 billion in assets since 2015, it’s clear that he believes both in turning a profit and in keeping that profit low relative to underlying costs.

Before DeepSeek became famous outside China in the last few weeks, it had been famous in China for at least a year for its aggressive pricing vs other LLM AI companies. China’s AI market is hyper-competitive, with large players like Alibaba, Baidu and others. And DeepSeek has more than held its own, using its product innovations to cut costs and lower its prices aggressively for end users in China.

This comes through in the founder’s philosophy on pricing:

“After DeepSeek V2’s release, it quickly triggered a fierce price war in the large-model market. Some say you’ve become the industry’s catfish.”

“Liang Wenfeng: We didn’t mean to become a catfish — we just accidentally became a catfish. [Translator’s note: This is likely a reference to Wong Kar-wai’s new tv show 王家卫 “Blossoms Shanghai” 繁花, where catfish are symbolic of market disruptors due to their cannibalistic nature.]”

“Waves: Was this outcome a surprise to you?”

“Liang Wenfeng: Very surprising. We didn’t expect pricing to be so sensitive to everyone. We were just doing things at our own pace and then accounted for and set the price. Our principle is that we don’t subsidize nor make exorbitant profits. This price point gives us just a small profit margin above costs.”

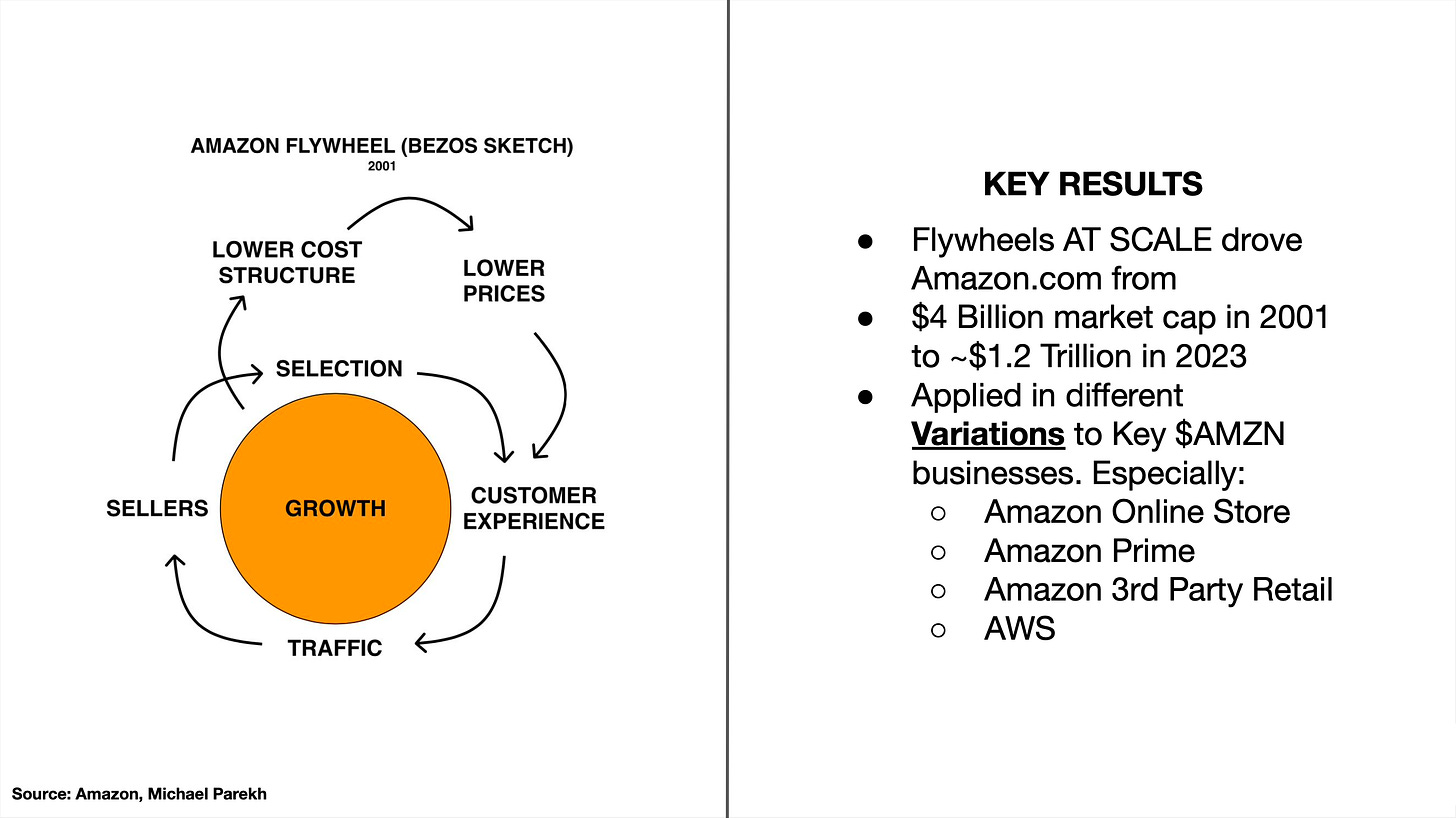

Low pricing to create Flywheels is not new. Amazon, inspired by Costco, did exactly that on its way to Internet greatness.

And OpenAI is very much focused on the same for its flywheel.

But the deeper takeaway here is that DeepSeek figured out early on, relative to competitors, how to price low while staying WITHIN its costs, without subsidizing. A feat easier with software than in the physical world of warehouses, and now AI Data Centers.

It’s a DeepSeek takeaway worth noting.

4. Competition with minimal Politics matters:

Another takeaway from Liang Wenfeng is that he is the kind of Chinese entrepreneur increasingly endangered under Chinese President Xi Jinping’s style of governance. Xi’s crackdown, starting with Alibaba founder Jack Ma and many others since then, has curtailed Chinese companies’ ability to truly be all they could be.

There’ve been exceptions, of course, like ByteDance founder Zhang Yiming, whom I wrote about recently in the context of ByteDance’s subsidiary TikTok and the pending US TikTok ban.

These and many other Chinese founders, especially in tech, march to their own drummer. Their core instincts on what to build and how to sell it around the world have allowed them to blaze fascinating trails. See Shein, Temu, and others. In the best tradition of US founders and entrepreneurs. With as little politics as possible.

China’s top tech founders took their cue from US founders and heroes, much like ByteDance’s Zhang Yiming, who after visits to Silicon Valley in the 2010s decided he could compete globally from China.

DeepSeek’s Liang Wenfeng seems cut from the same cloth, having closely tracked the US’s most famous ‘Quant’ investor, Renaissance Technologies hedge fund founder Jim Simons, as Liang built his multi-billion-dollar quant hedge fund in China a decade ago. And he is now closely tracking US AI leaders like OpenAI, Anthropic, Meta and others to build and grow DeepSeek.

He and these other Chinese entrepreneurs remind us that the world is a better place when founders and companies can compete in as open a global playing field as possible.

And of course, China itself IS NOT an open playing field, since most US tech companies other than the likes of Apple and Tesla cannot compete there.

But the principle is the same. The more openly US companies can compete globally, with fewer China curbs, the better off they, and all of us, will be.

Thus DeepSeek’s ability to do ‘Far More with Far Less’ is a key Deeper Take from their success. They likely did everything they did with relatively little government awareness or interference on their end. That may now change, since they’re front and center with their ‘overnight global success’.

But for now, the four deeper takes are clear. Transparency, Constraints, Low Prices driven by Scaling Flywheels, and Low-Politics Competition all matter more than has been assumed thus far in this AI Tech Wave. Stay tuned.

(NOTE: The discussions here are for information purposes only, and not meant as investment advice at any time. Thanks for joining us here)
